In our previous article, Introduction to VR, we reviewed the history of Virtual Reality and the origins of some of the most influential ideas about experiencing a virtual world. We also covered some of the early technologies developed by the brilliant people who first conceived of VR technology, dating back to the 1800s.

In that article, we traced the history of VR up to the modern day, when the whole industry was changed forever by a teenager working in his parents’ garage. Palmer Luckey built the prototype that became the Oculus Rift and funded its commercialization through a hugely successful Kickstarter campaign.

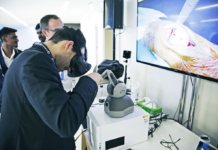

In this section, we are going to talk in more detail about the technology behind VR today. We’ll cover the hardware, platforms, and experiences that work together to make VR the immersive success it has become.

The Technology Behind VR

When you put on a Virtual Reality headset, you step into a different world. You can be visiting a distant planet one minute and reliving the Revolutionary War the next. Of course, you aren’t actually in these exotic and historic locations; you’re likely standing in the middle of your living room trying to avoid hitting your coffee table or being tripped by a family member. Either way, wearing a VR headset transports you somewhere else, and the technology that makes this possible is absolutely amazing! So, what exactly goes into an average VR setup to enable these experiences? Let’s jump in and review what’s out there today!

The VR headsets on the market today all share a similar form factor: a unit the user wears on the front of their head. These headsets provide binocular viewing through a pair of lenses placed in front of the display hardware. The type of lens, the viewing angle, the display resolution, and the display technology vary between headsets, but they all share these basic attributes.

The biggest difference between head-mounted displays (HMDs) today is how content is delivered to the displays. The original Oculus Rift and its fast follower, the HTC Vive, were tethered systems that required a high-powered PC to process the graphics and deliver high-resolution, high-refresh-rate images to the HMD. A growing trend, however, and the direction the market is heading, is toward untethered, fully standalone VR HMDs. The Oculus Quest is the standout product in this space, and we are likely to see many more devices of similar quality and capability going forward.

Another category of VR HMDs worth mentioning is smartphone-powered VR. These devices have very limited capabilities compared to higher-end devices like the Rift, Vive, or Valve Index. They can display 360-degree video, but they lack the processing power to render rich 3D scenes that a user can interact with. Their tracking is also slower and less accurate, and it typically follows only the rotation of the user’s head, not its position. But for viewing 360-degree video, smartphone VR HMDs can be forgiven for not meeting Beat Saber-level tracking requirements.

Whether the HMD is tethered or untethered, the method of displaying VR content is similar: two closely, but not exactly, matching image streams are sent to the headset and shown on one or two display panels. A pair of lenses sitting between the user’s eyes and the display focuses the two image streams and reshapes them to produce an immersive visual experience. The close but not exact match between the images presented to each eye is what creates the stereoscopic 3D effect. This mirrors how people see the real world: each eye receives a similar but not identical image, which allows people to perceive depth. Even when looking at the same object, a person is still seeing two different images composited into one, because the eyes sit a few centimeters apart and each has its own viewing angle.

The VR headset achieves stereoscopic 3D by offsetting the two images by the same distance as the spacing between a person’s eyes, known as the interpupillary distance (IPD). The more closely this offset matches the user, the more immersive and convincing the VR experience can be.
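To make the offset concrete, here is a minimal Python sketch of how a renderer might place its two virtual eye cameras. It starts from the tracked head position and shifts half the IPD to each side along the head’s "right" direction. The function name, the y-up coordinate convention, the yaw-only head rotation, and the default IPD of 63 mm (a commonly cited adult average) are all assumptions for illustration, not any headset SDK’s API.

```python
import math


def eye_positions(head_pos, yaw_rad, ipd_m=0.063):
    """Return (left_eye, right_eye) positions for a tracked head.

    Hypothetical helper for illustration. Assumes a y-up coordinate system in
    meters, a head that faces -z at yaw 0, and rotation about the vertical
    axis only. ipd_m is the interpupillary distance (0.063 m is an assumed default).
    """
    # Unit vector pointing to the user's right for the given yaw.
    right_x, right_z = math.cos(yaw_rad), -math.sin(yaw_rad)
    half = ipd_m / 2.0
    x, y, z = head_pos
    # Shift half the IPD to each side of the head center.
    left_eye = (x - right_x * half, y, z - right_z * half)
    right_eye = (x + right_x * half, y, z + right_z * half)
    return left_eye, right_eye


# Each eye's image is rendered from its own position. The small horizontal
# shift between the two viewpoints is what produces the stereo depth cue.
left, right = eye_positions((0.0, 1.7, 0.0), yaw_rad=0.0)
```

Adjusting `ipd_m` to match the individual user is the software counterpart of the IPD adjustment many headsets provide.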

When creating immersive VR Experiences, remember that the user has existing sensory capabilities that must be accounted for and designed around. The human brain can consume vast amounts of data from all the senses simultaneously, and the synchronization of those sensory inputs has been refined over the course of each individual’s lifetime. For designers of VR Experiences, this means that any content delivered to any of the human senses needs to be timed just right. Users very quickly sense when the timing is off, and the illusion breaks! But a broken illusion should be the smallest of a VR Developer’s concerns: when incoming sensory data is misaligned in time, a person very quickly starts to feel sick. This is commonly referred to as motion sickness.

In simple terms, motion sickness happens when two or more of a person’s senses don’t agree on what is being experienced. This may be an evolutionary safeguard that helps people avoid dangerous situations, but it is absolutely a guidepost for VR Developers who don’t want to lose customers. We will dive deeper into motion sickness in a later post and walk you through how to design experiences that keep your customers from getting sick and fleeing your game or experience forever.

The two primary factors in maintaining the timing the human body expects are frame rate and positional accuracy. Sustaining the frame rate requires enough compute power to render and deliver two high-resolution images at or above 70 fps. The frames must also accurately reflect the movements the user is making, with new images that reflect those movements delivered in less than 11 ms. These are the lowest thresholds currently deemed acceptable in the VR industry. Frame rates below 70 fps or latency above 11 ms are said to contribute to motion sickness and to break the VR illusion. Current headsets are built to meet or exceed these metrics, so as long as your application renders each frame within its time budget, these problems are easy to avoid. As a VR Developer, your job will be to avoid unnatural locomotion and to maintain user orientation and image consistency in your VR Experiences (more on that a little later).
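The frame-rate figures above translate directly into a per-frame time budget. The Python sketch below is plain arithmetic: at a given frame rate, both eye images must be finished within 1000 / fps milliseconds. At 70 fps that is about 14.3 ms per frame, and one frame at 90 fps takes about 11.1 ms, which lines up closely with the 11 ms figure. The helper names are hypothetical.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to render and deliver one frame (both eyes)."""
    return 1000.0 / fps


def fits_budget(render_ms: float, fps: float) -> bool:
    """True if a frame that took render_ms to produce is ready in time."""
    return render_ms <= frame_budget_ms(fps)


for fps in (70, 90):
    print(f"{fps} fps -> {frame_budget_ms(fps):.1f} ms per frame")
# 70 fps -> 14.3 ms per frame
# 90 fps -> 11.1 ms per frame

# A frame that takes 12 ms is fine at 70 fps but misses the deadline at 90 fps.
print(fits_budget(12.0, 70), fits_budget(12.0, 90))  # True False
```

The budget shrinks as the frame rate rises, so a scene that runs comfortably on one headset can miss its deadline on another with a higher refresh rate.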

User Tracking – Headsets & Controllers

Consumer VR systems today include technology that helps VR Developers maintain the illusion and, hopefully, helps users avoid motion sickness. There are two types of user tracking in VR: inside-out tracking and outside-in tracking. Each comes with its own trade-offs.

Outside-In Tracking in VR

Outside-in tracking was the first type of tracking used by early VR systems from Oculus and HTC. It relies on sensors placed outside the user’s headset and controllers, positioned around the edge of the VR play area. On the Oculus Rift, external sensors track infrared lights on the headset and controllers. The HTC Vive reverses this arrangement: external base stations sweep the room with infrared light that sensors on the headset and controllers detect. Either way, the system uses these signals to track the user’s movements and gestures. Outside-in tracking is far better than the alternative at tracking movements close to the user’s body, which is a weak spot for inside-out tracking (discussed below).

The Rift used two desk-mounted sensors pointed toward a user standing in an open area in front of the desk. The Rift sensors detect the position of the headset and controllers as the user moves through a predefined ‘play area’. The drawback of this setup is the blind spots behind the user: any movement the sensors cannot see is not picked up by the VR system and isn’t reflected in VR. A third sensor can be purchased and added to the system to provide 360-degree tracking. Because the Oculus sensors must be connected to your computer with a USB cable, you have to snake a cable to the opposite side of the room from the first two sensors. It’s not an ideal setup, but a three-sensor Oculus Rift setup allows for 360-degree tracking and more immersive VR experiences.
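The blind-spot problem can be illustrated with a simplified top-down model. The Python sketch below treats the user’s body as a circle and checks whether the straight line from each sensor to a controller passes through it. The body radius, the positions, and the function names are made-up values for illustration. The model also ignores each sensor’s field of view and range, so it captures only the occlusion part of the problem.

```python
import math


def segment_hits_circle(a, b, center, radius):
    """True if the 2D segment a->b passes within `radius` of `center`."""
    ax, ay = a
    bx, by = b
    cx, cy = center
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.hypot(cx - ax, cy - ay) < radius
    # Project the circle center onto the segment and clamp to its endpoints.
    t = max(0.0, min(1.0, ((cx - ax) * dx + (cy - ay) * dy) / length_sq))
    closest_x, closest_y = ax + t * dx, ay + t * dy
    return math.hypot(cx - closest_x, cy - closest_y) < radius


def sensors_that_see(sensors, controller, body_center, body_radius=0.25):
    """Return the sensors whose line of sight to the controller is unobstructed."""
    return [s for s in sensors
            if not segment_hits_circle(s, controller, body_center, body_radius)]


body = (0.0, 0.0)                         # user standing at the origin
desk_sensors = [(-0.5, 2.0), (0.5, 2.0)]  # two sensors on a desk in front (+y)

# User faces the desk, controller held in front of the chest: both sensors see it.
print(len(sensors_that_see(desk_sensors, (0.0, 0.45), body)))   # 2
# User turns around, controller now on the far side of the body: blind spot.
print(len(sensors_that_see(desk_sensors, (0.0, -0.45), body)))  # 0
# A third sensor behind the play area restores line of sight.
print(len(sensors_that_see(desk_sensors + [(0.0, -2.0)], (0.0, -0.45), body)))  # 1
```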

The HTC Vive also uses outside-in tracking, similar in application to the Oculus Rift, but the placement of its tracking hardware allows larger areas to be covered. The two Lighthouse base stations are placed in opposite corners of your VR play area and can track large spaces in a full 360 degrees, so two are all you ever need. The other benefit of the Vive system is that the Lighthouse base stations do not need to be connected to the user’s PC, only to power, which gives more flexibility in deciding where to place them.

Inside-Out Tracking in VR

A growing trend in VR headsets is inside-out tracking, in which the entire tracking technology stack for motion and position sensing relies on sensors in the headset and controllers. Inside-out headsets typically have four to six cameras to capture as much of the user’s surroundings as possible. The movement and position data is processed on the user’s computer in tethered VR systems (like the Oculus Rift S) and on the device itself in standalone (untethered) VR systems like the Oculus Quest.

The Oculus Quest is a true standalone headset that achieves inside-out tracking using only the sensors and processing power on the device. It has four cameras on the headset that capture four distinct images of the user’s surroundings. These images are processed separately and used to compute the position and motion of the user. During device setup, when the user creates the Guardian boundary and play area, the separate images are stitched together into a single image for the user.

The drawbacks of inside-out tracking are similar to the challenge the Oculus Rift faces when its desk-mounted sensors cannot capture motion happening behind the user. With inside-out tracking, several areas close to the user’s body are hard for the sensors to pick up. In the initial software release that shipped with the Oculus Quest, this issue was very apparent, and it took Oculus about two months to correct the tracking problems in the launch software. The issues were noticed by gamers playing titles like Pavlov and Beat Saber. Both games had tracking issues, but the issues showed up in different contexts. In Pavlov, tracking broke down when a user brought one controller close to their face to look down the scope of a weapon. In Beat Saber, it appeared when a user swung at blocks on the opposite side of their body or swung close to their body.

The tracking issues were addressed with a software update that applied improved tracking algorithms, which did a much better job of predicting controller movements in both games. These predictions relied more on where a controller was likely to be than on a machine learning algorithm’s estimate based on previous readings. Such estimates could be wildly inaccurate, especially if the tracking had not been capturing the controller’s movements correctly in the first place.
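The exact algorithm in that update is not described here, so the following is only a generic illustration of the idea of predicting where a controller is likely to be. It is a constant-velocity (dead-reckoning) extrapolation in Python that estimates the controller’s position from its last two tracked samples. The names and the 100 ms cap on how far ahead it extrapolates are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    t: float    # timestamp in seconds
    pos: tuple  # (x, y, z) position in meters


def predict_position(prev: Sample, last: Sample, t_query: float,
                     max_ahead_s: float = 0.1) -> tuple:
    """Extrapolate the controller position at t_query assuming constant velocity.

    The look-ahead is clamped to [0, max_ahead_s] so that a long tracking gap
    does not push the prediction far off along a stale velocity.
    """
    dt = last.t - prev.t
    if dt <= 0.0:
        return last.pos  # no usable velocity; hold the last known position
    velocity = tuple((b - a) / dt for a, b in zip(prev.pos, last.pos))
    ahead = max(0.0, min(t_query - last.t, max_ahead_s))
    return tuple(p + v * ahead for p, v in zip(last.pos, velocity))


# Controller moving 1 m/s along x, then briefly lost by the cameras.
prev = Sample(t=0.000, pos=(0.00, 1.2, -0.3))
last = Sample(t=0.010, pos=(0.01, 1.2, -0.3))
print(predict_position(prev, last, t_query=0.030))  # roughly (0.03, 1.2, -0.3)
```

The clamp reflects the concern raised above: a prediction built on poor readings can drift far from reality if it is allowed to run unchecked.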

The Oculus Rift S also uses inside-out tracking, but it can draw on far greater processing power than the Oculus Quest. It also has the advantage of a higher frame rate, which captures more data for each second of gameplay. However, the Rift S also needed an update soon after launch because it suffered from poor tracking in the same corner cases as the Oculus Quest. The Rift S update was more robust and eliminated the tracking issues as far as users could detect.

VR Controllers

While the VR Headset is the primary component of a VR System, there are other devices that are crucial to an immersive VR Experience. Specifically, VR Controllers play a very important role in a VR System. VR Controllers are an abstraction that VR users rely on to interact with the virtual world (Abstraction is used in the context of computer technology to enable a person with eyes, hands, and ears to interact with the zeros and ones zipping around inside the CPU). In a perfect world, controllers would not be necessary in VR. The ideal controllers in VR are the user’s hands.

There are drawbacks to using hands as controllers in VR, even setting aside that accurate hand-tracking technology is not yet ready for commercial use. The primary drawback is the lack of feedback as users interact with the digital world. When a user reaches out to grab something in VR and that object starts to move, a VR Controller can provide sensory feedback, such as vibration, that strengthens the illusion and enhances immersion. If users attempted the same motion with their bare hands, nothing would tell them that the virtual object has any attributes beyond those presented visually.

Beyond the missing sensory feedback, the actions that can be performed with bare hands are limited to what the user can remember. A VR Controller avoids this problem because it offers a limited number of buttons and inputs, and that limited set helps tell the user what is possible.

Most VR Controllers come with a few common features: triggers, grip buttons, joysticks, press buttons, and capacitive touch areas for more free-form input that joysticks and arm motions can’t optimally capture. Controllers have been getting more advanced every year since the original Oculus Rift controllers. One of the most notable advancements in controller technology has come from the Valve Index Controllers (aka Knuckles controllers). Along with the ubiquitous wrist lanyard that VR controllers come with (to keep the controller attached in the event you perform a throwing motion and let go of it), the Valve Index Controllers also come with a strap that fastens the controller to the palm of your hand. This configuration lets users fully release their grip and have an open hand in VR while keeping the controller and its various inputs readily accessible.
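To show how capacitive touch areas add more free-form input on top of buttons and triggers, here is a hypothetical Python data structure for one controller’s inputs, along with an illustrative mapping from those inputs to a virtual hand pose. The field names, thresholds, and pose names are assumptions made for this example. Real runtimes expose controller inputs through their own APIs.

```python
from dataclasses import dataclass


@dataclass
class ControllerState:
    """Snapshot of one controller's inputs (hypothetical layout)."""
    trigger: float = 0.0            # analog, 0.0 released .. 1.0 fully pulled
    grip: float = 0.0               # analog grip button
    thumbstick: tuple = (0.0, 0.0)  # (x, y), each in [-1.0, 1.0]
    primary_button: bool = False
    secondary_button: bool = False
    index_touching: bool = False    # capacitive: index finger resting on the trigger
    thumb_touching: bool = False    # capacitive: thumb resting on buttons or stick


def hand_pose(state: ControllerState, grip_threshold: float = 0.5) -> str:
    """Pick a virtual hand pose from the inputs (illustrative mapping only)."""
    if state.grip < grip_threshold:
        return "open"
    # Hand is closed around the grip; capacitive sensors show which fingers are lifted.
    if not state.index_touching:
        return "point"
    if not state.thumb_touching:
        return "thumbs_up"
    return "fist"


print(hand_pose(ControllerState()))                                # open
print(hand_pose(ControllerState(grip=1.0, thumb_touching=True)))   # point
print(hand_pose(ControllerState(grip=1.0, index_touching=True)))   # thumbs_up
print(hand_pose(ControllerState(grip=1.0, index_touching=True, thumb_touching=True)))  # fist
```

Because the set of fields is fixed, the set of poses a game can recognize is also bounded. This matches the point above: a controller’s limited inputs help define what is possible.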

In later sections we will dive deeper into the technology behind VR Controllers and learn how to develop VR Experiences that take full advantage of the latest VR Controller technology, including the new ability to track individual fingers in VR.

This concludes Part 2 of Introduction to Virtual Reality. Congratulations on making it to the end! In Introduction to Virtual Reality Part 1, we discussed the history of Virtual Reality and the road that brought us to where we are today. In Introduction to Virtual Reality Part 2, we discussed the technologies in use today by some of the leading VR headset makers. You are now ready to move on to the next section in our VR Developer series. You are well on your way to gaining the knowledge and skills you need to become an expert in VR and start developing VR Games and Experiences!