Oculus first announced that it was working on hand tracking at its OC6 event in September 2019. Since then, the company has been working hard to get the technology to its customers as fast as possible. It originally promised the tech for the start of 2020, but plans changed and it is arriving before then. Hand tracking for consumers is available this week, and the SDK for developers will launch next week.

For the consumer version of the software, users will be able to control Oculus apps inside the Quest. The Oculus store, the TV browser, and Oculus Home are what users will get to control first. We expect the first games with hand tracking compatibility to reach the store at the beginning of the new year. With the SDK reaching developers by early next week, it shouldn’t take them long to incorporate it into their games and start rolling out updates.

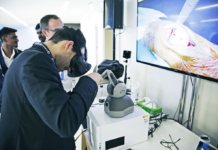

Hand tracking will engage users like never before. It enhances the sense of other users’ presence in social settings and the ability to interact with virtual and digital worlds. The new SDK doesn’t mean that every game is going to move away from the Touch controllers, but it will give devs new ways for players to interact with their games, even if only for a few scenes.

How to get started with your new SDK

Oculus has released some notes on what will be available. To avoid missing key information from their developer post, we have included it below.

Starting next week, you can begin building hand tracking-enabled Quest applications using our updated APIs like VrAPI which will now include three hand pose functions: vrapi_GetHandSkeleton, vrapi_GetHandPose, and vrapi_GetHandMesh. Technical documentation for these updated APIs, Native SDK, and Unity Integration will also be available next week, while UE4 compatibility will be available in the first half of 2020. A new Unity sample will also be available, showing how to use OVRInput to create hand-tracking experiences.
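To give a rough idea of what an app might do with per-frame hand pose data, here is a minimal sketch of a pinch check. It does not use VrApi or OVRInput: the `HandPose` class, the joint names, and the 2 cm threshold are all hypothetical, chosen only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class HandPose:
    """Hypothetical per-frame hand pose: joint name -> (x, y, z) in meters.

    This is an illustrative stand-in, not a structure from the Oculus SDK.
    """
    joints: dict


def is_pinching(pose, threshold_m=0.02):
    """Return True when the thumb tip and index tip are closer than threshold_m.

    The 2 cm default is an assumed value, not one taken from Oculus.
    """
    thumb = pose.joints["thumb_tip"]
    index = pose.joints["index_tip"]
    return math.dist(thumb, index) < threshold_m


# Two made-up poses: an open hand and a pinch.
open_hand = HandPose({"thumb_tip": (0.00, 0.00, 0.00), "index_tip": (0.06, 0.02, 0.00)})
pinched = HandPose({"thumb_tip": (0.00, 0.00, 0.00), "index_tip": (0.01, 0.00, 0.00)})
```

In a real app, the joint positions would come from whatever the SDK reports each frame, and a check like this could stand in for a controller trigger press.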

You can check out Oculus’s video of what they are explaining below.

As for publishing your apps and games with hand tracking, Oculus will start to review submissions on the first of January. This is going to make for a very busy holiday season for some developers, but a rewarding start to the year.

How hand tracking works with the Quest

Again, to ensure we don’t leave out anything of value, we are including the full Oculus paragraph on how they made hand tracking work on the Quest. You will also be able to see the video on how developers are planning to use the Quest’s newest feature.

To make this all possible, our computer vision team developed a new method of applying deep learning to understand the position of your fingers using just the monochrome cameras already built into Quest. No active depth-sensing cameras, additional sensors or extra processors are required. Instead, we use deep learning combined with model-based tracking to estimate the location and size of a user’s hands, then reconstruct the “pose” of a user’s hands and fingers in a 3D model. And this is all done on a mobile processor, without compromising resources we’ve dedicated to user applications.