At its annual Worldwide Developers Conference (WWDC), Apple announced several new technologies that will make it easier for developers to take advantage of the iPhone, iPad, and Mac when building virtual experiences.

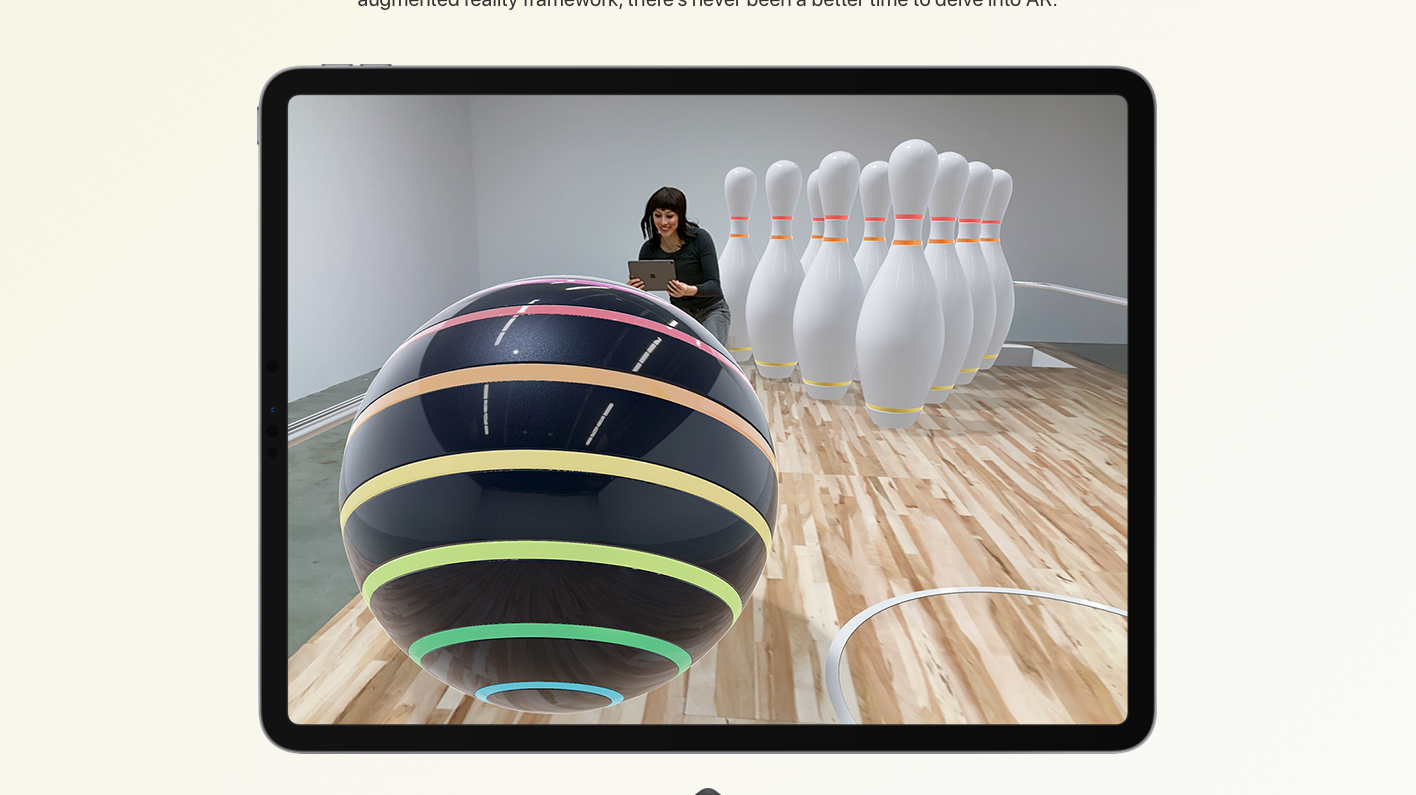

Apple has been pushing augmented reality (AR) since it announced ARKit in 2017. ARKit is an SDK that lets developers build apps that take full advantage of iPhone and iPad hardware to overlay virtual objects onto the real world.

The announcements at this year’s conference were significant: they solve problems and add features that are typically addressed only by gaming and movie studios with massive budgets. Most of these features will be available to anyone with an iPhone that can run the ARKit frameworks, but the two biggest features require a device with an A12 chip or newer (nothing newer exists today).

ARKit’s core technology already included horizontal and vertical plane detection, object detection, shadow simulation, world-scale matching, and, of course, anchoring objects to the real world.

Body Tracking & Motion Capture

This year, body tracking was added to the set of technologies developers can build into their applications. We tried it out in the lab and were pretty impressed with the preliminary results. Considering that body tracking usually requires a large motion capture rig with special cameras and equipment, it’s very impressive that Apple was able to pull off relatively accurate body tracking with only the camera on your phone!

The body tracking technology doesn’t currently track hands, but it can display a representation of the tracked person’s skeleton standing next to them.
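
To make the idea of a tracked skeleton more concrete, here is a minimal Swift sketch of one common way to store a skeleton: as a joint hierarchy in which each joint records its parent and its offset from that parent, with positions recovered by walking the chain from the root. The `Joint` and `Vec3` types, the joint names, and the translation-only simplification are all hypothetical; real body-tracking joints also carry rotation, and this is not how Apple implements body tracking.

```swift
// Hypothetical, translation-only skeleton model (real tracked joints also carry rotation).
struct Vec3: Equatable {
    var x: Double
    var y: Double
    var z: Double

    static func + (lhs: Vec3, rhs: Vec3) -> Vec3 {
        Vec3(x: lhs.x + rhs.x, y: lhs.y + rhs.y, z: lhs.z + rhs.z)
    }
}

struct Joint {
    let name: String
    let parentIndex: Int   // index of the parent joint; -1 marks the root
    let localOffset: Vec3  // offset from the parent joint, in meters
}

/// Converts parent-relative offsets into positions relative to the skeleton's origin
/// by accumulating offsets down the hierarchy.
/// Assumes every parent appears earlier in the array than its children.
func modelSpacePositions(_ joints: [Joint]) -> [Vec3] {
    var positions: [Vec3] = []
    positions.reserveCapacity(joints.count)
    for joint in joints {
        if joint.parentIndex < 0 {
            // The root's offset is taken directly as its position.
            positions.append(joint.localOffset)
        } else {
            precondition(joint.parentIndex < positions.count,
                         "parent \(joint.parentIndex) must come before joint \(joint.name)")
            positions.append(positions[joint.parentIndex] + joint.localOffset)
        }
    }
    return positions
}
```

Storing offsets relative to a parent means that moving one joint (say, the hips) automatically carries every joint below it in the hierarchy along with it, which is what makes this layout convenient for driving an animated virtual character.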


The body tracking and motion capture will enable developers to create new virtual characters with a more realistic set of movements and behaviors. It will also enable them to get to a finished product much faster.

Of course, this is a developer conference, and its entire purpose is to make developers’ lives easier and to get them to adopt Apple technology. Faster development, better development, more apps, and ultimately happy iPhone customers will mean more iPhone sales. Apple has a clear incentive to do this well, and so far it has continued to push AR and virtual development year after year.

While this was a rough demo on stage with some supplemental video content, Apple has been known to release ARKit updates mid-year, so we won’t necessarily have to wait until the next WWDC to see this technology improve.

Human Occlusion

Also demoed was human occlusion, which lets virtual objects in an AR scene adapt to the real people in the scene. Instead of a virtual object simply being laid on top of the physical world, it becomes integrated with it, appearing in front of or behind human subjects depending on where each is positioned in the scene.

This is a huge advancement, and the way Apple pulls it off was quite impressive. It’s one of the technologies that requires Apple’s latest A12 chip, which makes sense given how much has to be computed in real time.

It works by first cutting the human subjects out of the camera image and then building a new composite image in which the virtual objects and the human subjects play a game of ‘who should be in front’. As the phone’s user moves around, they see the people and virtual objects switch places. Most impressive was that this isn’t an all-or-nothing implementation: partial visibility of a virtual object, or of a human subject, is handled as well, and all of it is updated and computed in real time.
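
The ‘who should be in front’ decision can be illustrated with a minimal per-pixel sketch in Swift. It assumes each pixel already comes with a person-segmentation alpha, an estimated distance to the person, and the rendered virtual object’s color, coverage, and distance. All type and function names here are hypothetical, and this is a simplified model of depth-based compositing, not Apple’s implementation. (ARKit 3 exposes the underlying segmentation and depth data to developers through the `personSegmentationWithDepth` frame semantic.)

```swift
// Hypothetical types for a simplified, per-pixel occlusion model (not Apple's implementation).
struct RGB: Equatable {
    var r: Double
    var g: Double
    var b: Double

    /// Linear blend from `x` toward `y`: t = 0 returns x, t = 1 returns y.
    static func mix(_ x: RGB, _ y: RGB, _ t: Double) -> RGB {
        RGB(r: x.r + (y.r - x.r) * t,
            g: x.g + (y.g - x.g) * t,
            b: x.b + (y.b - x.b) * t)
    }
}

/// Everything the compositor is assumed to know about one pixel.
struct PixelSample {
    var camera: RGB           // color captured by the camera (the person, where one is present)
    var personAlpha: Double   // segmentation coverage: 0 = no person, 1 = fully person, fractional at edges
    var personDepth: Double   // estimated distance to the person, in meters
    var virtual: RGB          // rendered color of the virtual object
    var virtualAlpha: Double  // virtual coverage: 0 where no virtual object is drawn
    var virtualDepth: Double  // distance to the virtual surface, in meters
}

/// Decides, per pixel, whether the person or the virtual object should be in front.
func compositePixel(_ p: PixelSample) -> RGB {
    // Step 1: draw the virtual object over the camera image (no occlusion yet).
    let withVirtual = RGB.mix(p.camera, p.virtual, p.virtualAlpha)

    // Step 2: where the person is closer than the virtual surface, paste the camera
    // pixel back on top, weighted by the segmentation alpha so edges stay partial.
    // With personAlpha == 0 this leaves the virtual result unchanged.
    if p.personDepth < p.virtualDepth {
        return RGB.mix(withVirtual, p.camera, p.personAlpha)
    }
    return withVirtual
}

/// Applies the per-pixel test to a whole frame (a flat array of pixels).
func compositeFrame(_ pixels: [PixelSample]) -> [RGB] {
    pixels.map(compositePixel)
}
```

Because the segmentation alpha is fractional along a person’s outline, edge pixels blend the person and the virtual object instead of snapping to one or the other, which is one way to get the partial visibility shown in the demo.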


RealityKit & Reality Composer

Apple dedicated no fewer than eight sessions to AR/VR technology updates. The common thread throughout all of them was the desire to make developing AR experiences approachable, easy, and almost automatic. Apple wants to solve the hard problems so that developers can focus on the creative ones. And that is exactly what RealityKit and Reality Composer enable.

“Architected for AR, RealityKit provides developers access to world-class capabilities for rendering, animation, physics, and spatial audio.” Apple.com

While other 3D animation and design environments exist, their workflows require expertise in several disciplines. Apple hasn’t entirely solved that problem, and it only briefly discussed where developers are supposed to get additional 3D assets for their AR scenes. But it is making the second half of the AR development workflow easier: the part that ends with an app in the App Store.

Developers will still need to get custom 3D assets from the usual places: paid asset libraries or building them themselves. So don’t throw away your copy of Maya or Blender just yet; you’ll still need it to export your 3D assets.

USDZ and Reality File Format

It’s worth mentioning USDZ, the unsung and underutilized file format that Apple introduced alongside ARKit 2 in 2018. To get a taste of what the USDZ format can do, go to this website with your iPhone and place the virtual objects on the table in front of you. If that isn’t impressive enough, you can send these objects via iMessage, and other iPhone users can see the same model in front of them without any additional software. AR is built into iOS, and USDZ is the format used to package and deliver these models.

Reality files exported from Reality Composer are also ready to open and use without any additional software. Support for opening and manipulating them is built into macOS and iOS (and the new iPadOS), so you can send a Reality file you’re working on from your Mac to an iPhone and view it without worrying about converting file formats. The recipient can simply view the file with AR Quick Look, or open and edit it if they want to make changes.

In typical Apple fashion, the company is solving problems up and down the entire technology stack for its AR deployment. That makes sense if Apple wants to maintain the walled-garden safe haven it has created for its users. “Hardware, software, and services” has been Apple’s battle cry for years, and its entry into the AR/VR space seems to follow the same strategy.

Other AR Advances

In addition to the topics above (for the full rundown, I’d recommend the WWDC session videos and documentation at developer.apple.com/ARkit), Apple announced several other new features coming in RealityKit and ARKit 3:

Simultaneous Front and Back Camera: Face tracking and world tracking can now run at the same time, using the front and back cameras together. A user could then interact with the world using their face, or you could imagine recreating a user’s face virtually so that another AR user can see it, making the experience even more immersive.

World-Class Rendering: To blend virtual objects with real-world objects, RealityKit adds realistic physically based materials, environment reflections, grounding shadows, camera noise, motion blur, and several other effects that make virtual content look like part of the real world, “…making virtual content nearly indistinguishable from reality.”

Collaborative Sessions: Multiple users can now build and update the same shared world map at the same time, with the mapping information shared among all the devices in the session. This makes multiplayer games far more engaging and creates a much higher-fidelity experience with better object anchoring and tracking.

Multiple Face Tracking: The iPhone’s back camera has offered face detection for years, but only for taking photos, and that technology couldn’t enable things like Animoji or Face ID. Those required the TrueDepth camera, and now the TrueDepth camera can track up to three faces in real time, bringing Animoji effects to a group instead of just one person.

Shared AR Experiences: Solving the networking problem of a shared AR experience is no small feat, but Apple has done it for developers. Developers can now use RealityKit’s API to enable shared AR experiences without the overhead of building a peer-to-peer network themselves. Apple says the API maintains a consistent state across the shared world, optimizes network traffic, handles packet loss, and performs ownership transfers, all out of the box.

Enhanced Object Detection: You’ll now be able to detect up to 100 images at a time, and detection includes information about each image’s size. There have also been advancements in 3D object detection, making it easier to recognize objects in complex environments. And, never one to miss an opportunity to talk about machine learning, Apple announced that machine learning is now used to detect planes in the environment much faster than before.