Leading up to the launch of the Oculus Quest, the head of Oculus third-party content told the press that porting higher-end PC titles to the standalone platform was less complicated than most people anticipated. According to that guidance, the bulk of the work was changing the format of visual and 3D assets, rather than optimizing the code that ran in the game engine.

As real-world evidence that this guidance was sound, the VR developer Immerse has demonstrated how it followed Oculus's advice, optimized its visual assets, and ported a higher-end PC training app to the Oculus Quest in only five weeks.

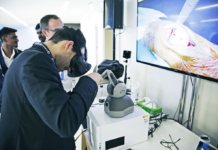

Immerse is the developer of a training app used by DHL for new warehouse employees. The previous version of the app was used while trainees were tethered to a computer. The primary objective of the port was to make the training untethered while maintaining the same look and feel as the tethered experience.

The appeal of an untethered experience for warehouse training needs little explanation: the benefits are clear. With the inside-out tracking available on the Oculus Quest, walking the floor of a virtual warehouse becomes more immersive than a high-resolution tethered experience could provide.

Immerse's goal, however, was to come as close as possible to the visuals of the tethered experience. According to Immerse, most people who have tried the training cannot tell the Oculus Quest version apart from the previous tethered solution.

Immerse has been working with the Quest development kit since early 2019, aiming to have the port ready for the launch of the Oculus Quest headset in May 2019.

The challenge seemed daunting, and the guidance from Oculus leadership that the same assets from the Rift could be used for the Quest with some asset reformatting did little to reduce the anxiety of Immerse's product leadership. Even so, they dug into their DHL training app to see what optimization and asset reformatting could be done to get things working on the Oculus Quest.

Some of the asset reformatting was achieved with Unity's built-in settings. Other adjustments required a little more time and attention. The biggest discovery, and the one that made porting from the Rift to the Quest fast, was understanding the target hardware and the formats that perform well on it natively.

For example, the texture format that Immerse was using for the Rift deployment made everything look blurry on the Quest. The team discovered that the GPU-native RGBA 32-bit format was well suited to the Quest hardware, and that once the assets were reformatted to it, visual quality no longer degraded when the assets were moved to the Quest.
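To put the trade-off in rough numbers, here is a minimal Python sketch of the memory cost of an uncompressed RGBA 32-bit texture, which stores four 8-bit channels (4 bytes) per pixel. The helper name `rgba32_texture_bytes` and the 1024×1024 example size are illustrative assumptions, not figures reported by Immerse.

```python
def rgba32_texture_bytes(width: int, height: int, with_mipmaps: bool = False) -> int:
    """Return the size in bytes of an uncompressed RGBA 32-bit texture.

    Each pixel uses 4 bytes (8 bits each for R, G, B, and A). When
    with_mipmaps is True, every smaller mip level down to 1x1 is added.
    """
    bytes_per_pixel = 4
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_pixel
        if not with_mipmaps or (w == 1 and h == 1):
            break
        # Each mip level halves both dimensions, never going below 1 pixel.
        w = max(1, w // 2)
        h = max(1, h // 2)
    return total


# Hypothetical example: a single 1024x1024 texture.
base_size = rgba32_texture_bytes(1024, 1024)                    # 4 MiB
full_chain = rgba32_texture_bytes(1024, 1024, with_mipmaps=True)
print(base_size, full_chain)
```

An uncompressed format trades memory for fidelity, so on memory-constrained hardware it is worth budgeting which textures actually need it.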

Some additional tweaks to individual assets were also pursued to reduce unnecessary GPU and CPU cycles on the Quest. Examples include reducing shadows, texture variations, and lighting. Reducing or removing these environmental elements helps, but it can be frustrating when an artist has worked hard on immersion and put considerable effort into capturing textures and lighting that recreate a specific scene. However, strategically reducing some of these visual effects can make a massive difference in performance, especially in a processor-intensive application like a VR training environment.

Porting a highly visual experience to the Quest will require a great deal of care, and may take more than the five weeks that Immerse took to port its DHL VR training application. Still, it is encouraging to see that there is a path to porting titles from other VR headsets to the Quest in very little time.

Reducing polygon counts, reducing the total number of objects in the scene, and pre-baking as much lighting as possible are among the other optimizations that Immerse had to make to achieve its final port to the Quest.

This sounds like a large number of compromises that strike at the core of what VR is supposed to be. Essentially, the port required the team to make the training experience less visually realistic in order to enable an untethered experience that lets the trainee walk around a real warehouse and interact with digital objects in VR.

With these changes, it sounds as though a real-life training environment might be a little safer to traverse than wearing a fully occluding headset that does a sub-par job of recreating the physical world digitally.

Real-time lighting was eliminated for nearly everything in the VR training experience. This change alone delivered one of the biggest performance boosts.

Reducing the object count also helped a great deal. The team would take the floor and anything permanently attached to it and turn them all into a single object. That way the Quest didn't have to render several objects individually, just the single odd-shaped object (floor + table + barrel + chair, etc.).
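The idea of folding static objects into one can be sketched in a few lines of Python. This is an illustration of the general technique, not Immerse's code and not Unity's API: the `Mesh` type and `merge_static_meshes` function are hypothetical, and the sketch assumes all vertices are already in world space and that the merged objects share a material.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[int, int, int]


@dataclass
class Mesh:
    """Hypothetical minimal mesh: vertex positions plus index triples."""
    vertices: List[Vec3]
    triangles: List[Triangle]


def merge_static_meshes(meshes: List[Mesh]) -> Mesh:
    """Concatenate several static meshes into a single mesh.

    Assumes vertices are already expressed in world space, so no
    per-object transform needs to be applied before merging.
    """
    merged_vertices: List[Vec3] = []
    merged_triangles: List[Triangle] = []
    for mesh in meshes:
        # Indices of this mesh must be shifted past the vertices added so far.
        offset = len(merged_vertices)
        merged_vertices.extend(mesh.vertices)
        merged_triangles.extend(
            (a + offset, b + offset, c + offset) for a, b, c in mesh.triangles
        )
    return Mesh(merged_vertices, merged_triangles)


# Illustrative data: a quad floor and a single-triangle "table".
floor = Mesh(
    vertices=[(0, 0, 0), (4, 0, 0), (4, 0, 4), (0, 0, 4)],
    triangles=[(0, 1, 2), (0, 2, 3)],
)
table = Mesh(
    vertices=[(1, 1, 1), (2, 1, 1), (1, 1, 2)],
    triangles=[(0, 1, 2)],
)

combined = merge_static_meshes([floor, table])
print(len(combined.vertices), combined.triangles)
```

The polygon count is unchanged by this step; what drops is the number of separate objects the renderer has to submit, which is the saving described above.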

The conclusion Immerse came away with was that the Quest has just enough power to deliver a compelling VR experience, as long as developers of VR titles know how to properly leverage the available compute and memory. The Quest will not be forgiving to VR titles that aren't optimized for its constrained hardware.