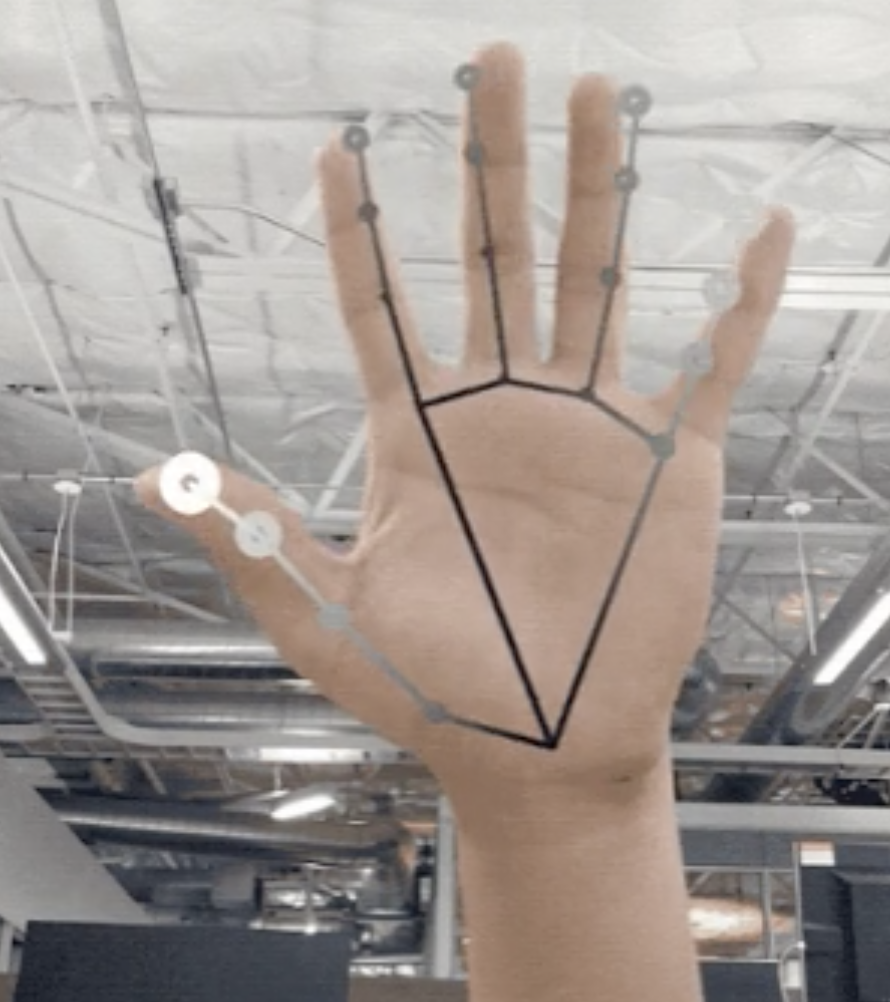

Google is planning to release an open source algorithm that tracks 21 keypoints on each hand. The system is part of Google's MediaPipe, a machine learning framework whose solutions also include face detection, object detection, and even hair segmentation. This may seem like a very cool mobile device feature for the future, or it could mean much more.

When you put on a virtual reality headset, the first thing you want to do is interact with the world around you. Like three-year-olds, we get to know our surroundings by touching and feeling them. If you are new to VR, you will have to get used to the controllers that stay glued to your hands for the entirety of your experience. Any VR controller does a fine job of representing your hands in a virtual world, but the best representation of your hands is your hands. Although there have been rumblings around the web about Facebook building a hand tracking camera, it seems Google was the first to the party.

For non-gaming VR experiences, controllers seem to be more of a chore than anything else. If you typically use your hands when you talk to people, you are going to have some difficult moments in Rec Room VR as you try to communicate with your virtual buddies. If hand tracking could eliminate the need for controllers entirely, everybody inside and outside the industry would welcome it with open arms and a smile.

In its announcement blog post, Google doesn’t mention virtual reality at all, although the company is known to still be experimenting with a virtual reality headset that could rival the Oculus Quest in mobility and simplicity. Google did, however, emphasize that the technology runs on mobile phones and not only on desktops. As Fan Zhang writes in the post, “Whereas current state-of-the-art approaches rely primarily on powerful desktop environments for inference, our method achieves real-time performance on a mobile phone, and even scales to multiple hands. Robust real-time hand perception is a decidedly challenging computer vision task, as hands often occlude themselves or each other (e.g. finger/palm occlusions and hand shakes) and lack high contrast patterns.”

This technology could do more than enable hand tracking in virtual reality; it could also be used for far more helpful things in the real world. Sign language in particular could benefit greatly. Video calls on FaceTime and Skype can be very difficult for people with hearing disabilities, and this type of technology is something that could be used by millions across the globe. VR is already doing a lot of good in the world, and this technology could turn right around and help the VR world in return.

The blog post also describes which gestures are recognized: “On top of the predicted hand skeleton, we apply a simple algorithm to derive the gestures. First, the state of each finger, e.g. bent or straight, is determined by the accumulated angles of joints. Then we map the set of finger states to a set of pre-defined gestures. This straightforward yet effective technique allows us to estimate basic static gestures with reasonable quality. The existing pipeline supports counting gestures from multiple cultures, e.g. American, European, and Chinese, and various hand signs including ‘Thumb up’, closed fist, ‘OK’, ‘Rock’, and ‘Spiderman’.”
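The two-step approach quoted above (classify each finger as bent or straight from its accumulated joint angles, then look the finger states up in a table of pre-defined gestures) can be sketched in a few lines of Python. This is a minimal illustration, not Google's implementation: it assumes MediaPipe-style 21-landmark indexing (wrist at index 0, then four points per finger from base to tip), and the 60-degree threshold, the American-style gesture table, and the `synthetic_hand` helper are all hypothetical. Signs such as "OK", which depends on fingertips touching, or telling a thumb up from a thumb down, would need extra geometric checks beyond finger states.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]

# Assumed landmark layout (MediaPipe-style 21-point hand): 0 is the wrist,
# and each finger contributes four consecutive points from base to tip.
FINGER_CHAINS: Dict[str, List[int]] = {
    "thumb": [0, 1, 2, 3, 4],
    "index": [0, 5, 6, 7, 8],
    "middle": [0, 9, 10, 11, 12],
    "ring": [0, 13, 14, 15, 16],
    "pinky": [0, 17, 18, 19, 20],
}
FINGER_ORDER = ["thumb", "index", "middle", "ring", "pinky"]

# Hypothetical threshold: total bend (degrees) above which a finger counts as bent.
BENT_THRESHOLD_DEG = 60.0

# Hypothetical table mapping (thumb, index, middle, ring, pinky) "is straight"
# flags to static gestures, using American-style counting.
GESTURES: Dict[Tuple[bool, ...], str] = {
    (False, False, False, False, False): "Fist",
    (True, False, False, False, False): "Thumb up",
    (False, True, False, False, False): "One",
    (False, True, True, False, False): "Two",
    (False, True, True, True, False): "Three",
    (False, True, True, True, True): "Four",
    (True, True, True, True, True): "Five",
    (False, True, False, False, True): "Rock",
    (True, True, False, False, True): "Spiderman",
}


def _angle_between(u: Sequence[float], v: Sequence[float]) -> float:
    """Angle in degrees between two 3D vectors (0 if either has zero length)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    if norm == 0.0:
        return 0.0
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def finger_state(landmarks: Sequence[Point], chain: List[int]) -> str:
    """Classify one finger as 'straight' or 'bent' from accumulated joint angles."""
    # Bone vectors between consecutive landmarks along the finger.
    bones = [
        tuple(b - a for a, b in zip(landmarks[i], landmarks[j]))
        for i, j in zip(chain, chain[1:])
    ]
    # Sum the turn at every joint; a perfectly straight finger totals 0 degrees.
    total = sum(_angle_between(u, v) for u, v in zip(bones, bones[1:]))
    return "bent" if total > BENT_THRESHOLD_DEG else "straight"


def classify_gesture(landmarks: Sequence[Point]) -> str:
    """Map the five finger states to a pre-defined gesture name."""
    states = tuple(
        finger_state(landmarks, FINGER_CHAINS[name]) == "straight"
        for name in FINGER_ORDER
    )
    return GESTURES.get(states, "Unknown")


def synthetic_hand(straight: Sequence[bool]) -> List[Point]:
    """Hypothetical toy landmarks: fingers fan out from the wrist in the x-y
    plane; a bent finger curls its last two segments 90 degrees into -z."""
    points: List[Point] = [(0.0, 0.0, 0.0)] * 21
    for f, name in enumerate(FINGER_ORDER):
        dx = float(f - 2)  # spread the fingers along x
        for k, idx in enumerate(FINGER_CHAINS[name][1:], start=1):
            if straight[f] or k <= 2:
                points[idx] = (dx * k, float(k), 0.0)
            else:
                points[idx] = (dx * 2, 2.0, -float(k - 2))
    return points


if __name__ == "__main__":
    # Index and pinky straight, everything else bent.
    print(classify_gesture(synthetic_hand([False, True, False, False, True])))
```

A lookup table keeps the design close to the post's description: adding a gesture, or a different culture's counting convention, only means adding or swapping table entries, while the per-finger angle test stays the same.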

We are excited to see where this technology goes. Will it make it into communication apps? Will it make it into VR headsets? That is uncertain right now, but we are certain it will be put to use in the near future.