The time has come for Apple to dominate the AR and VR environments. Whatever Apple makes seems to be a hit. The AirPods Pro are sold out everywhere. The iPhone 11 is the highest-selling smartphone on the market. The newest MacBook Pro is one of the most powerful laptops in the world. Apple seems to be shifting their focus to AR and VR, though, and their latest patent shows that.

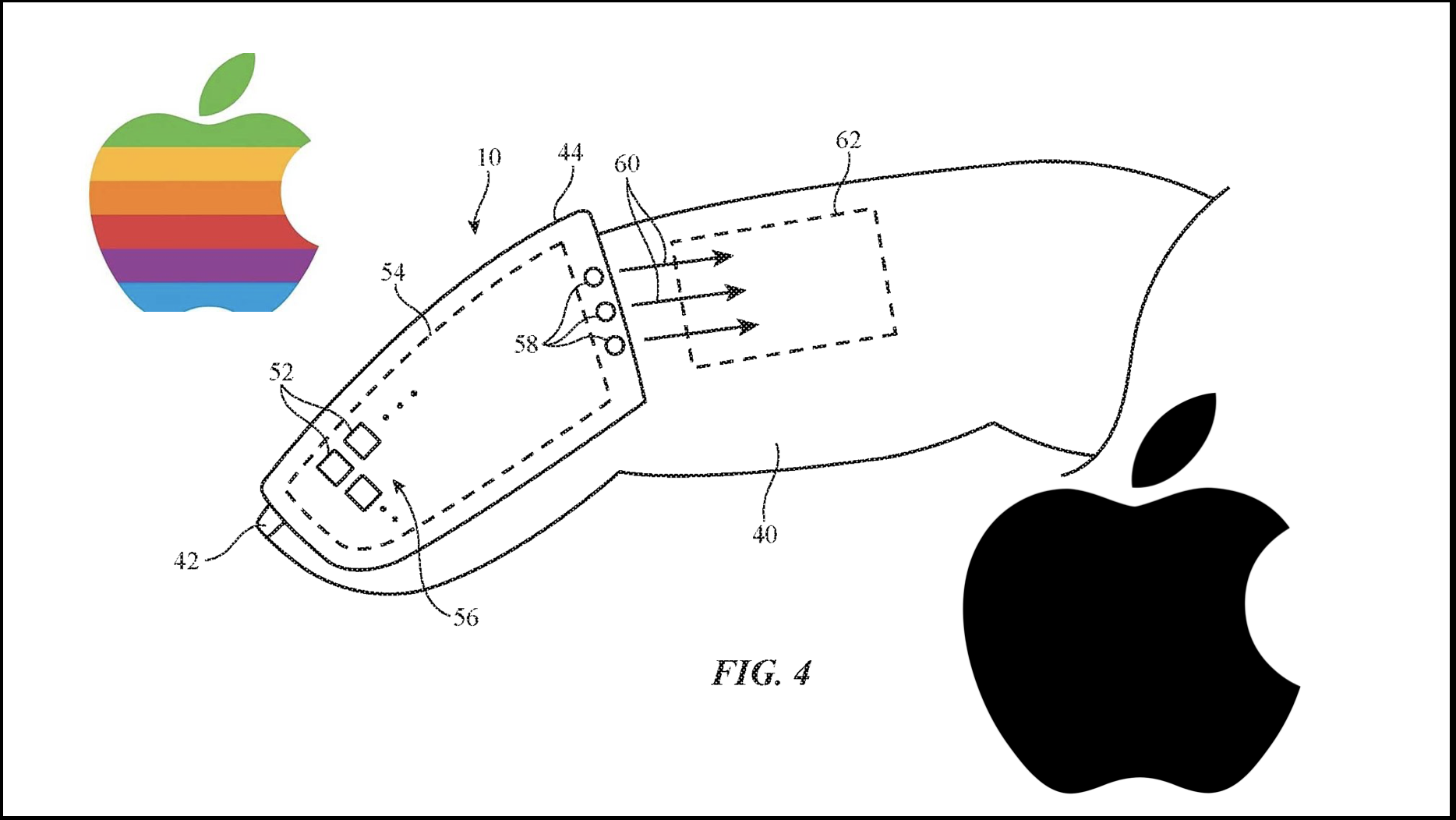

In a patent published on January 23, 2020, Apple lays out the benefits of finger devices. What are finger devices, according to Apple? There is a lot to this wearable device. This is a long patent, so we are going to break it down for you.

(This patent was filed in July of 2018.)

Background on Idea

The need to control a virtual or augmented reality is always going to be present. Even in 20 years, that need will still be there. Whether it is with our eyes or with Apple’s new finger devices, some form of input will always be required.

In the background section of the patent, Apple discusses how input is usually paired with haptics, and that computers need some type of input. They note that past input methods “may not be convenient for a user, may be cumbersome or uncomfortable, or may provide inadequate feedback.”

In short, Apple knows that whatever they release next will need a groundbreaking input method.

What Are Finger Devices Used For?

Apple would use the finger devices for input, but input for what? It’s no secret that the company is working on some sort of head-mounted display (HMD). This patent gives us a little more information. Let’s break down what we know.

For each point, we will give a headline summarizing what we know, quote how Apple referred to it, and then describe how it could impact a headset going forward.

Eye Tracking

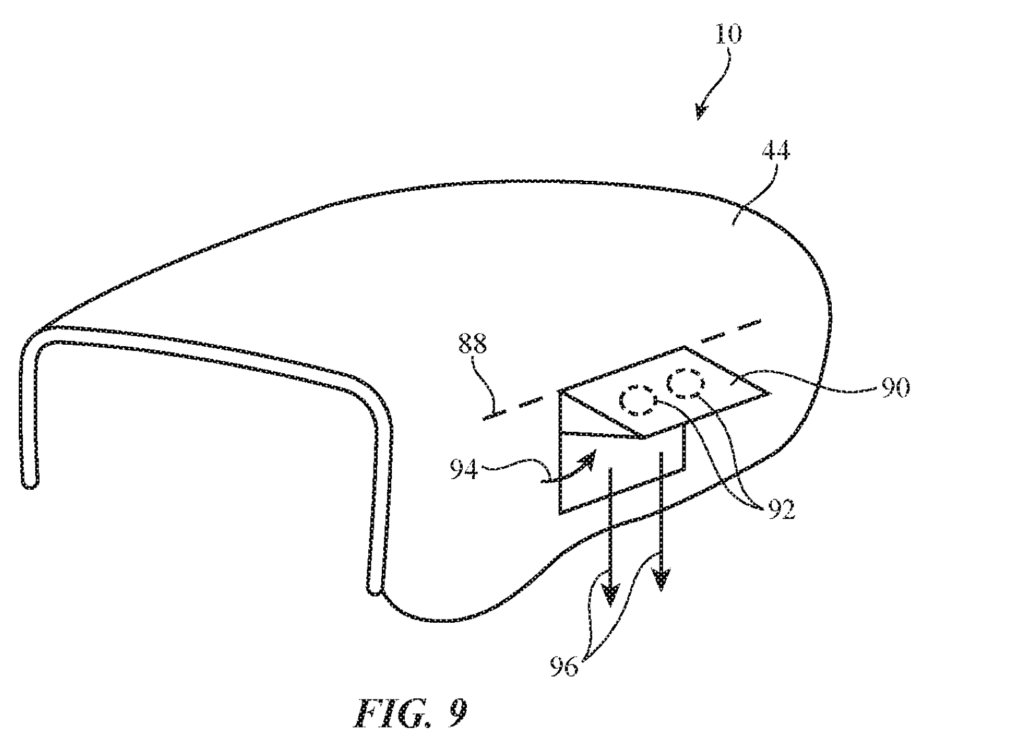

The device that is being controlled by the finger devices will have a gaze tracker. Apple describes the system as “further comprising a head-mounted support structure in which the display is mounted, wherein the gaze tracker is mounted to the head-mounted support structure.”

This means that whatever this headset looks like or is used for, it is going to use eye tracking in an intelligent way. Moving the displayed content to where you are looking could be important, and even the more traditional uses of eye tracking could matter a great deal. Eye tracking would also give the user an extra input option.

Content Overlay

“11. A head-mounted device operable with a finger device that is configured to gather finger input from a finger of a user, comprising: control circuitry configured to receive the finger input from the finger device; head-mountable support structures; a display supported by the head-mountable support structures; and a gaze tracker configured to gather point-of-gaze information, wherein the control circuitry is configured to use the display to display virtual content that is overlaid on real-world content and that is based on the point-of-gaze information and the finger input.”

This means that the headset referred to isn’t necessarily a VR headset. In fact, it sounds much more like an AR headset. Aside from the Lynx headset set to debut next week, we don’t know of many VR headsets that double as AR headsets.
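To make the claim a little more concrete, here is a minimal sketch of how point-of-gaze information and finger input could be combined to pick which overlaid item the user means. It is an illustration only, not Apple’s method: the `VirtualItem` class, the `pick_item` function, the normalized display coordinates, and the `radius` tolerance are all hypothetical names and values that do not appear in the patent.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class VirtualItem:
    """A piece of virtual content overlaid on the real world (hypothetical)."""
    name: str
    x: float  # assumed normalized display coordinates, 0.0 to 1.0
    y: float


def pick_item(items, gaze_x, gaze_y, finger_tapped, radius=0.1):
    """Return the item closest to the point of gaze, but only when the
    finger device reports a tap and the item lies within `radius`."""
    if not finger_tapped or not items:
        return None
    nearest = min(items, key=lambda it: hypot(it.x - gaze_x, it.y - gaze_y))
    if hypot(nearest.x - gaze_x, nearest.y - gaze_y) <= radius:
        return nearest
    return None


items = [VirtualItem("menu", 0.2, 0.2), VirtualItem("photo", 0.8, 0.5)]
selected = pick_item(items, gaze_x=0.78, gaze_y=0.52, finger_tapped=True)
print(selected.name if selected else "nothing selected")
```

In this sketch the eyes do the pointing and the finger does the confirming, which is one plausible reading of content that is “based on the point-of-gaze information and the finger input.”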

Input and Haptics

“7. The system defined in claim 1 wherein the finger device includes a sensor configured to gather finger pinch input and wherein the control circuitry is further configured to use the display to display the virtual content based on the finger pinch input.”

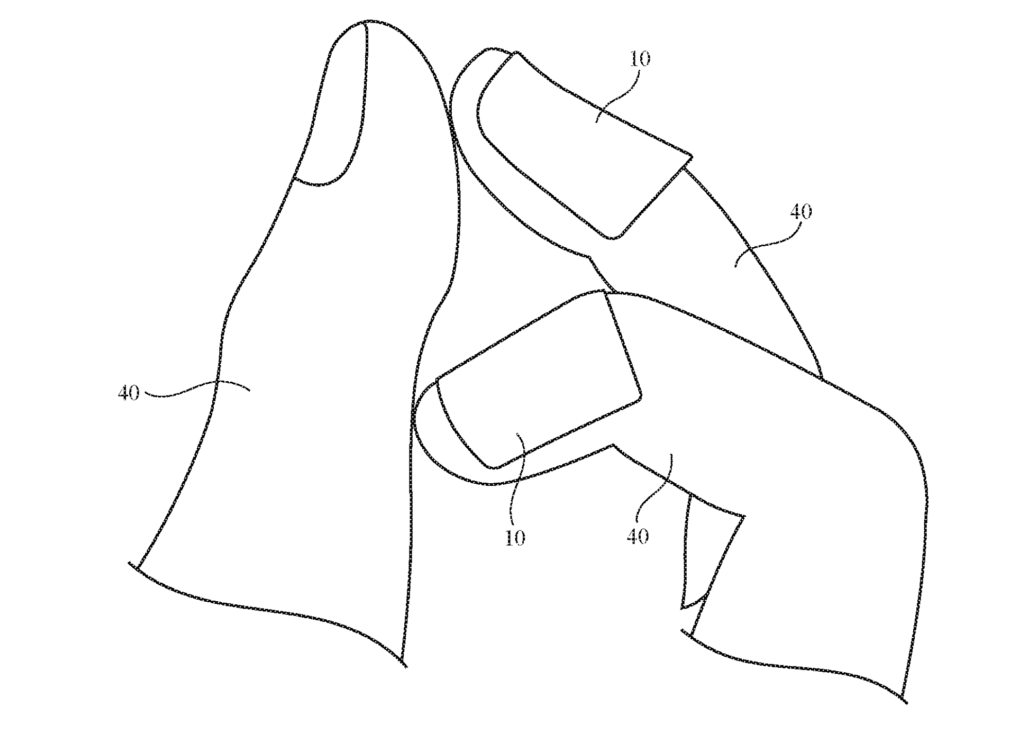

The input, as with any other headset or computing device, is going to change what you see on the screen. Notice that they specifically say “pinch” input, though. A pinch involves at least two fingers, so the device could evidently be worn on more than one finger. It is also important to know that this could very well replace the idea of finger tracking. Humans naturally make small, precise movements with their fingers, and detecting those with a VR or AR headset alone could be very hard within the next few years.

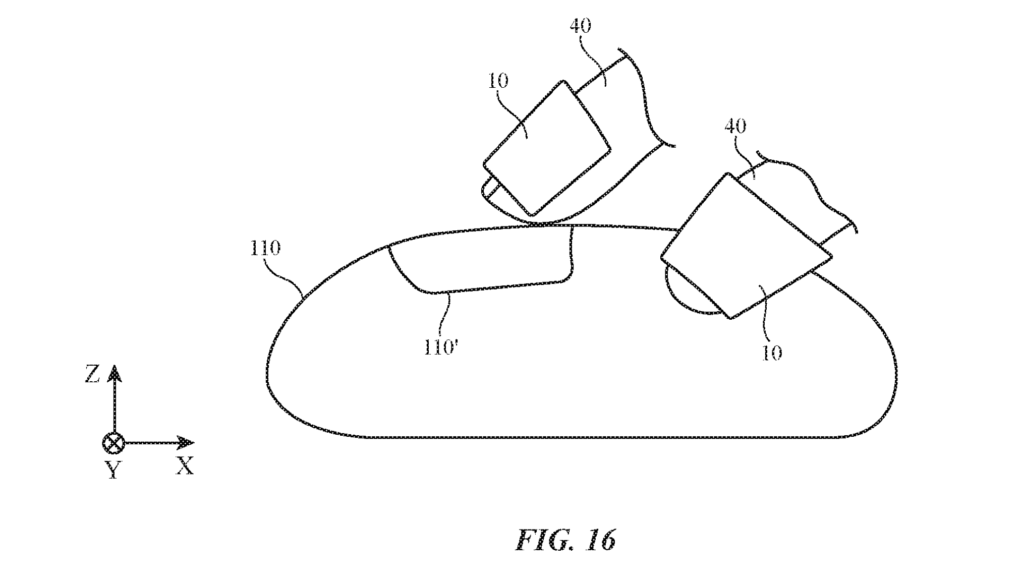

“15. A finger device operable with a head-mounted device that is configured to display virtual content over a real-world object, the finger device comprising: a housing configured to be coupled to a finger at a tip of the finger while leaving a finger pad at the tip of the finger exposed; a haptic output device coupled to the housing; sensor circuitry configured to gather finger position information as the finger interacts with the displayed virtual content; and control circuitry configured to provide haptic output to the finger using the haptic output device based on the finger position information.”

This one is a little trickier to understand. In short, the wearable is going to interact with the content shown by the headset. That might seem obvious, but there is an idea in here that could thrive: a virtual or augmented keyboard. The haptic feedback making it feel like keys, the constant interaction with it, and the exposed finger pad all make sense.

This keyboard idea is supported by the following two points.

“16. The finger device defined in claim 15 wherein the sensor circuitry includes an inertial measurement unit configured to gather the position information while the user is interacting with the real-world object that is overlapped by the virtual content.”

“17. The finger device defined in claim 15 wherein the sensor circuitry includes strain gauge circuitry configured to gather a force measurement associated with a force generated by the finger as the finger contacts a surface of the real-world object while interacting with the displayed virtual content.”

In the summary, paragraph 0009 says, “In some arrangements, a user may interact with real-world objects while computer-generated content is overlaid over some or all of the objects.”
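As a rough illustration of how these pieces might fit a keyboard, the sketch below turns one fingertip sample (a position, as an inertial measurement unit might help estimate, and a force, as a strain gauge might report) into a key press and a haptic pulse. Everything specific here is an assumption made for the example: the 19 mm key pitch, the 0.5 N press threshold, the single row of keys, and the function names `key_at` and `handle_sample` are hypothetical and do not come from the patent.

```python
KEY_WIDTH_M = 0.019    # assumed key pitch in metres
ROW = "asdfghjkl"      # a single row of virtual keys laid along the desk
PRESS_FORCE_N = 0.5    # assumed force threshold for a deliberate press


def key_at(x_m):
    """Map a fingertip position along the row (metres) to a virtual key."""
    if x_m < 0:
        return None
    index = int(x_m // KEY_WIDTH_M)
    if index >= len(ROW):
        return None
    return ROW[index]


def handle_sample(x_m, force_n):
    """Return (key, haptic_pulse) for one position and force sample.

    A key is registered only when the measured force crosses the
    threshold, and a haptic pulse is requested only for a valid key.
    """
    if force_n < PRESS_FORCE_N:
        return None, False
    key = key_at(x_m)
    return key, key is not None


print(handle_sample(0.03, 0.8))  # a firm press 3 cm along the row
print(handle_sample(0.03, 0.1))  # a light touch that should be ignored
```

The force threshold is what would separate resting a finger on the desk from pressing a key, and the haptic pulse is what would stand in for the feel of a physical key.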

It is also important to notice that they want haptics included. If you are pounding away on a wooden desk for hours without any feedback, you’ll not only be typing too hard, but you also won’t be able to feel where each individual key is. Apple isn’t the first company to think of an AR/VR keyboard. But if that’s the route they go, they could well master it before any other company releases a competing product.

This would all make sense, but why do you need a keyboard?

Why the A14 Chip Matters

Having a virtual keyboard is cool, but what could it be used for? Well, if you are familiar with how powerful the A14 chip is going to be, you could get a few ideas.

A mobile workstation is something that could come out of all of this. You could toss this headset on and start working without carrying any other hardware with you. This is something that would appeal to millions across the globe. It would enable you to work from home, on the road, and anywhere else with a flat surface. Of course, you would need your finger devices with you.

If Apple is able to harness their mobile computing power for something of this nature, it would change the way businesses use virtual and augmented reality. Right now, many of those that use MR tech are using it to create objects. There is a wide variety of businesses that don’t have a VR headset, but this would completely change that.

Whether you are simply typing or working on new digital designs, the headset will be capable. These finger devices are only the start of the augmented and virtual empire that Apple will eventually build. It seems as if they are already light-years ahead of the competition, notwithstanding the fact that they have yet to release anything.

For more VR news and community updates, make sure to check back at VRGear.com.